Private AI Cloud for On-Demand Audio

Project Objective: To create a cost-effective and private audiobook solution for my personal e-book library, I engineered a distributed Text-to-Speech (TTS) pipeline. This system leverages local AI processing to transform text-based content (PDFs, EPUBs) into high-quality audio, accessible securely from any location.

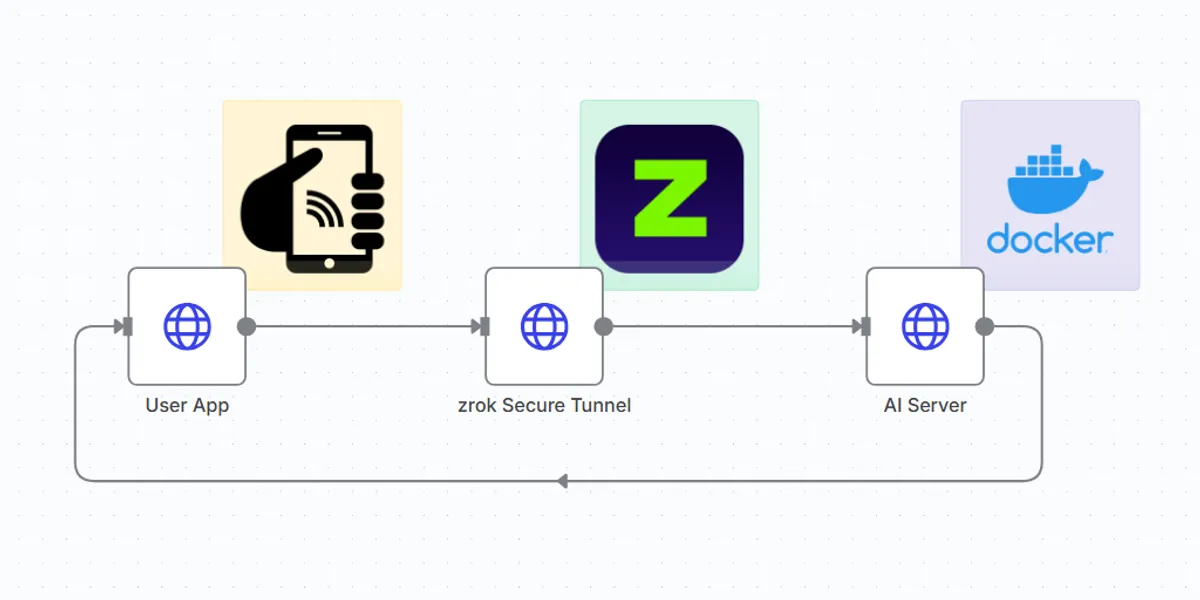

The architecture follows a classic client-server model, prioritizing security, flexibility, and cost control.

The End-to-End Process:

- Client Request: Using the Speech Central mobile app, I select a local e-book. The app is configured to act as a client pointing to my personal API endpoint.

- Secure Tunneling: The request goes to a public URL provided by zrok, an open-source tunneling service built on the OpenZiti overlay network. The zrok share routes the request to the designated service on my home server, so I do not need to open inbound firewall ports or expose my home IP address.

- Local Processing: The request is received by one of two TTS servers hosted in my home lab:

  - Engine 1 (openai-edge-tts): Exposes an OpenAI-compatible endpoint backed by Microsoft Edge's online text-to-speech service, which produces fast, natural-sounding speech. Synthesis for this engine runs on Microsoft's servers, so it needs an internet connection.

  - Engine 2 (openedai-speech): Provides an OpenAI-compatible API endpoint with fully local generation, allowing for experimentation and offline capability.

- Audio Streaming: The selected engine processes the text and streams the generated audio data back through the zrok tunnel to the Speech Central app on my device for real-time playback.
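
The request and streaming steps above can be sketched from the client's side. This is a minimal illustration, not what Speech Central does internally: it assumes the server exposes the OpenAI-style `/v1/audio/speech` route, and `BASE_URL`, `API_KEY`, the `tts-1` model name, and the `alloy` voice are placeholder assumptions to replace with the values of an actual deployment.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute the public URL of your own tunnel
# and whatever key (if any) your TTS server is configured to expect.
BASE_URL = "https://my-tts-share.example.invalid"
API_KEY = "your_api_key_here"


def build_speech_request(text, voice="alloy", model="tts-1", audio_format="mp3"):
    """Build a POST request for an OpenAI-style /v1/audio/speech route."""
    payload = {
        "model": model,            # assumed model name
        "input": text,             # the text to synthesize
        "voice": voice,            # assumed voice name
        "response_format": audio_format,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/v1/audio/speech",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def stream_speech_to_file(text, out_path, chunk_size=8192):
    """Send the request and write audio bytes to disk as they arrive."""
    request = build_speech_request(text)
    with urllib.request.urlopen(request, timeout=60) as response, \
            open(out_path, "wb") as out:
        while True:
            chunk = response.read(chunk_size)
            if not chunk:  # an empty read means the server finished sending
                break
            out.write(chunk)
    return out_path
```

Calling `stream_speech_to_file("Chapter one.", "chapter1.mp3")` against a reachable server would save the returned audio. A player app would feed each chunk to its audio decoder instead of a file, which is what makes near-real-time playback possible.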

This project was not just about connecting tools; it was about making deliberate architectural choices to achieve specific outcomes.

- Self-Hosting for Cost Control & Privacy: By hosting the TTS endpoints myself, I avoid the variable, per-character fees of commercial TTS APIs such as OpenAI's or Google Cloud Text-to-Speech, which keeps operating costs predictable and close to zero. With the locally generating engine, all text is also processed on my own hardware and never leaves my network; the Edge-based engine is the exception, since it sends text to Microsoft's service for synthesis.

- Dual-Engine Flexibility: Maintaining two separate TTS engines provides redundancy and allows for A/B testing. I can choose the better engine for each book based on the desired balance of speech quality, processing speed, and voice character.

- Secure Remote Access: Implementing zrok was a key security decision. It provides robust, encrypted access to a home-lab service without the risks of traditional port forwarding, demonstrating an understanding of modern secure networking practices.
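
The redundancy that two engines provide could also be automated with a small selection step. The sketch below is one possible approach, not part of the setup described here: the engine labels mirror "Engine 1" and "Engine 2" above, while the local URLs, ports, and the `choose_engine` and `http_probe` helpers are hypothetical names introduced for illustration.

```python
import urllib.error
import urllib.request

# Hypothetical local addresses; the ports are placeholders for illustration.
ENGINES = (
    ("engine-1", "http://localhost:5050"),
    ("engine-2", "http://localhost:8000"),
)


def http_probe(url, timeout=3.0):
    """Return True if anything answers at `url`, even with an error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True   # the server responded, just not with a 2xx status
    except OSError:
        return False  # connection refused, DNS failure, timeout, ...


def choose_engine(engines, is_healthy, preferred=None):
    """Return (name, url) of the first healthy engine, trying `preferred` first."""
    # sorted() is stable, so non-preferred engines keep their original order.
    ordered = sorted(engines, key=lambda engine: engine[0] != preferred)
    for name, url in ordered:
        if is_healthy(url):
            return name, url
    raise RuntimeError("no TTS engine is reachable")
```

A call such as `choose_engine(ENGINES, http_probe, preferred="engine-2")` would try the second engine first and fall back to the first if it does not answer. Passing the health check in as a function keeps the selection logic testable without a running server.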

Conclusion: This project successfully created a personal, on-demand audiobook service that is both more private and significantly more cost-effective than commercial alternatives. It serves as a strong proof-of-concept for building and securely deploying private AI services.