GenAI Art: A Case Study on Precision Control & Branded Marketing

As a UX Engineer, I'm driven by the challenge of making powerful technology truly useful. When Stable Diffusion emerged, I saw immense potential but also a common user frustration: a lack of precise control. My goal was to move beyond simple text-to-image prompting and engineer workflows for specific, targeted outcomes.

After evaluating the landscape of open-source tools—from the user-friendly Fooocus to the standard A1111 web UI—I chose ComfyUI for its node-based, granular architecture, which was essential for building the custom pipelines I envisioned. To bridge the gap between technical complexity and a creative workflow, I integrated ComfyUI with the open-source image editor Krita via a community-built plugin. This setup became my testbed for two distinct challenges: creating high-engagement marketing assets and performing high-fidelity digital edits.

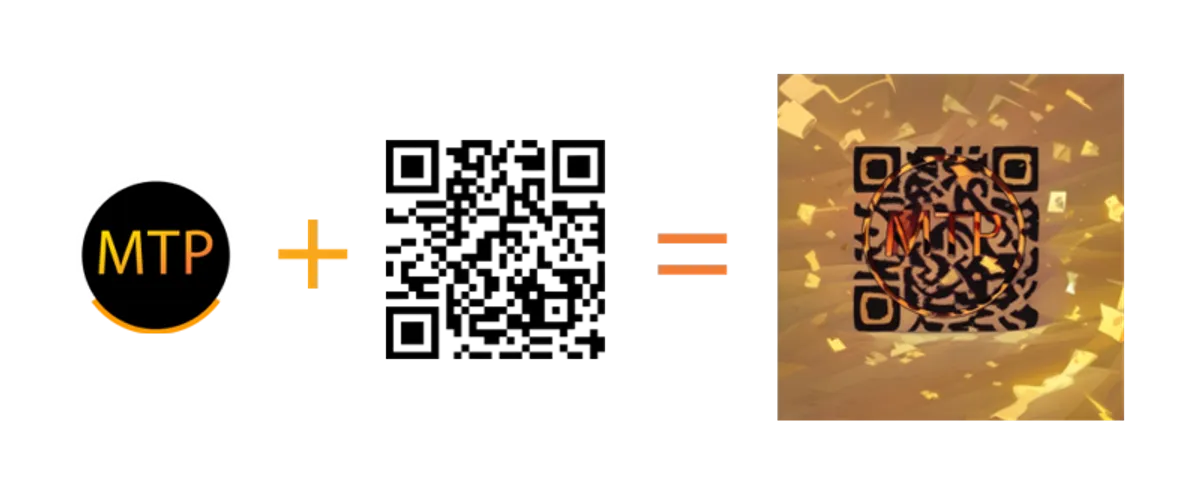

QR codes are a ubiquitous but often ignored marketing tool. Their generic appearance fails to capture user curiosity, representing a missed opportunity for brand engagement. This project explored a solution: creating visually engaging, branded QR codes that remain fully scannable. The hypothesis was that an artistic QR code could significantly increase user engagement and reinforce brand identity.

To achieve this, I designed a pipeline in ComfyUI using the ControlNet QR Code Monster v2 model. The workflow was as follows:

- Input: A standard, functional QR code was generated using an open-source library.

- Control: This code was fed into the ControlNet as a structural conditioning image, so its pattern of light and dark modules steered the composition the AI produced.

- Creative Guidance: I then used text prompts and our 'MTurk Pro' logo as an image prompt to guide the artistic generation within the QR code's functional boundaries.

- Output: The result is a scannable QR code that artistically integrates our brand's visual elements.

The core pipeline: combining a functional QR code with brand assets.
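As a rough illustration of how the steps above map onto a ComfyUI graph, the sketch below builds a dictionary in the style of ComfyUI's API-format workflow, where each node has a `class_type` and its `inputs`, and a link is written as `[source_node_id, output_index]`. It uses core node class names only. The file names, prompt text, and numeric settings are placeholders, not the values used in this project, and the logo image prompt is omitted because it would depend on a custom node pack not named here.

```python
def build_qr_workflow(qr_image, prompt, control_strength=1.2, seed=0):
    """Return an API-style graph: node id -> {class_type, inputs}.

    All file names and settings below are illustrative placeholders.
    """
    return {
        # Base model; outputs are MODEL (0), CLIP (1), VAE (2)
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": "sd15_checkpoint.safetensors"}},
        # Positive and negative text prompts
        "2": {"class_type": "CLIPTextEncode",
              "inputs": {"text": prompt, "clip": ["1", 1]}},
        "3": {"class_type": "CLIPTextEncode",
              "inputs": {"text": "blurry, low contrast", "clip": ["1", 1]}},
        # The plain, functional QR code used as the control image
        "4": {"class_type": "LoadImage", "inputs": {"image": qr_image}},
        "5": {"class_type": "ControlNetLoader",
              "inputs": {"control_net_name": "qr_code_monster_v2.safetensors"}},
        # Attach the QR structure to the positive conditioning
        "6": {"class_type": "ControlNetApply",
              "inputs": {"conditioning": ["2", 0], "control_net": ["5", 0],
                         "image": ["4", 0], "strength": control_strength}},
        "7": {"class_type": "EmptyLatentImage",
              "inputs": {"width": 768, "height": 768, "batch_size": 1}},
        "8": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["6", 0],
                         "negative": ["3", 0], "latent_image": ["7", 0],
                         "seed": seed, "steps": 30, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": 1.0}},
        "9": {"class_type": "VAEDecode",
              "inputs": {"samples": ["8", 0], "vae": ["1", 2]}},
        "10": {"class_type": "SaveImage",
               "inputs": {"images": ["9", 0], "filename_prefix": "branded_qr"}},
    }


def dangling_links(graph):
    """List (node_id, input_name) pairs whose link targets a missing node."""
    missing = []
    for node_id, node in graph.items():
        for name, value in node["inputs"].items():
            is_link = (isinstance(value, list) and len(value) == 2
                       and isinstance(value[0], str))
            if is_link and value[0] not in graph:
                missing.append((node_id, name))
    return missing
```

The `control_strength` value is the main trade-off in a graph like this: higher values tend to favor scannability, lower values leave more room for the artwork. `dangling_links` is only a sanity check on the wiring before a graph is submitted.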

This proof of concept demonstrates that the workflow is viable. The true potential, however, lies in automation and scaling. The next evolution of this project is to build a closed-loop system:

- The AI generates a batch of designs based on brand assets.

- An automated validator, using a library like jsQR, tests each code for scannability.

- Only the successful, on-brand designs are approved and cataloged.
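The validation step of that loop could look something like the sketch below. jsQR is a JavaScript library, so this Python version takes the decoder as a plain function argument; in practice that function might wrap jsQR in a Node process or use a Python QR reader, which is an assumption rather than a settled design. The `Candidate` class and `validate_batch` function are hypothetical names for illustration. The check compares the decoded text to the expected payload, since a code that scans but resolves to the wrong URL is still a failure. It does not cover the "on-brand" judgment, which would need a separate review step.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional, Tuple


@dataclass
class Candidate:
    name: str
    image: object  # whatever the chosen decoder accepts: a path, bytes, an array


# A decoder returns the decoded text, or None if no QR code was read.
Decoder = Callable[[object], Optional[str]]


def validate_batch(
    candidates: Iterable[Candidate],
    expected_payload: str,
    decode: Decoder,
) -> Tuple[List[str], List[str]]:
    """Split candidates into (approved, rejected) names by scannability."""
    approved, rejected = [], []
    for candidate in candidates:
        try:
            decoded = decode(candidate.image)
        except Exception:
            # Treat a decoder crash the same as an unreadable code.
            decoded = None
        if decoded == expected_payload:
            approved.append(candidate.name)
        else:
            rejected.append(candidate.name)
    return approved, rejected
```

Keeping the decoder injectable also makes it possible to run several decoders against the same batch, which would be a closer stand-in for the variety of phone cameras and scanner apps in the wild.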

This automated QA process would make it possible to generate thousands of unique, functional, and on-brand creative assets at scale. For rapid, high-volume inference, this entire pipeline could be deployed on a remote GPU service like RunPod.

A common challenge in digital asset modification is performing targeted, natural-looking edits without corrupting the original art style. Standard AI in-painting often over-modifies an image, altering faces, textures, and lighting in undesirable ways. This project aimed to develop a high-fidelity workflow for subtle, controlled modifications.

The objective was to add a colored highlight to a character's hair—a simple concept that tested the limits of AI precision.

Using a human-in-the-loop process, I first created a precise layer mask in Krita, isolating only the character's hair. This mask, along with the original image, was passed to ComfyUI. The mask confined the AI's changes to the hair, and by carefully tuning the denoising strength I controlled how far it could "re-imagine" that area from the original while applying the new color prompt.
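The role of the mask can be pictured as a per-pixel blend between the original and the regenerated image. The sketch below is a conceptual illustration only, using small grayscale grids and a hypothetical `composite` function; it is not how ComfyUI or the Krita plugin implement in-painting internally.

```python
def composite(original, generated, mask):
    """Blend two same-sized grayscale grids using a mask in [0.0, 1.0].

    mask = 1.0 takes the generated pixel, 0.0 keeps the original,
    and values in between give a soft (feathered) transition.
    """
    return [
        [o * (1.0 - m) + g * m for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]
```

Under this picture, any pixel with a mask value of zero is guaranteed to match the original, which is why a tight hair mask protects faces and backgrounds, while a feathered mask edge avoids a visible seam.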

The final image demonstrates a seamless highlight that respects the original artwork's lighting, texture, and orange background reflections. This human-in-the-loop process also allowed me to selectively incorporate other AI-suggested enhancements while rejecting those that didn’t fit, maintaining full creative direction. It validates AI not as an unpredictable generator, but as a precision tool within a professional workflow.

These projects are just the beginning. My work with Stable Diffusion, ComfyUI, and emerging models like FLUX.1, HunyuanVideo, and Wan2.1 demonstrates my ability to not just use AI, but to architect and build custom solutions that solve specific creative and business problems.

I am passionate about diving deep into the technical stack—from evaluating models to engineering automated pipelines—to bridge the gap between human intention and machine capability. I am actively seeking opportunities where I can apply this builder's mindset to create the next generation of innovative, user-centric AI tools and experiences.